The SAP Databricks Alliance is Truly Significant


SAP recently announced what they called a “landmark partnership” with Databricks. This really is very significant. Every ten years or so, there is a technology that truly shakes up the enterprise and supply chain software markets. Vendors that embrace the new technology take market share. Those that don’t struggle. We may be at that inflection point.

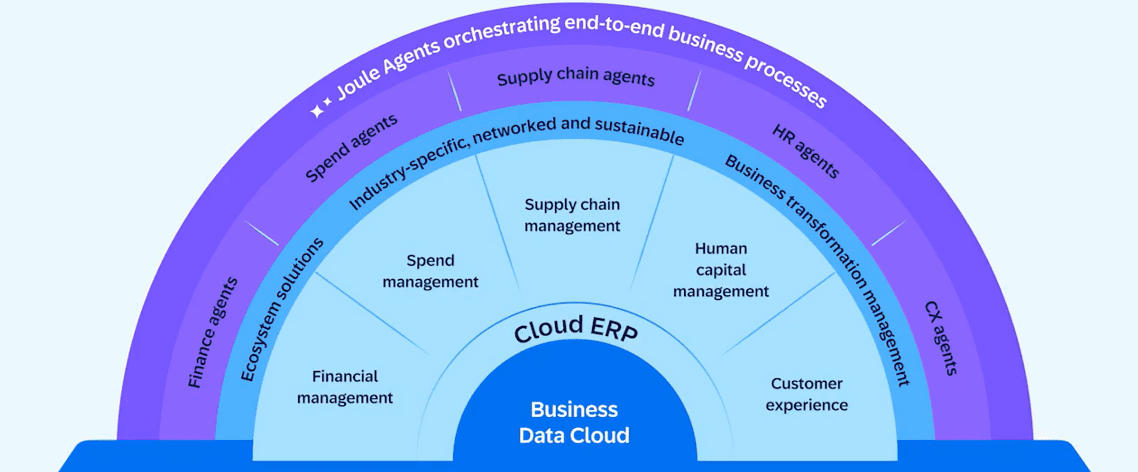

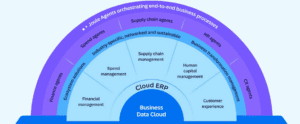

SAP is the world’s largest provider of business software. Databricks offers a Data Intelligence Platform. This type of solution is increasingly being called a “data fabric” or a data platform built on “data fabric principles.”

A data fabric does not store data itself; it connects to data in diverse sources and provides access to it without physically moving or duplicating it. This is an impressive feat that sounds almost magical. A data fabric speeds and simplifies access to data assets across the business: it accesses, transforms, and harmonizes data from multiple sources to make it usable and actionable for a wide range of business use cases. When data is decoupled from applications, it can be created once and delivered seamlessly, in real time, wherever it is needed.
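To make the idea concrete, here is a minimal sketch of the core data-fabric pattern. It is not how Databricks or SAP implement it. The fabric holds connectors and field mappings rather than the data itself; records are read from each source at query time and harmonized into one vocabulary on the fly. The source names (`erp_eu`, `wms_us`), the field names, and the `Source` and `DataFabric` classes are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterable, List

Record = Dict[str, object]


@dataclass
class Source:
    """A connector to one system of record; the fabric holds this, not the data."""
    name: str
    fetch: Callable[[], Iterable[Record]]  # reads live from the source at query time
    field_map: Dict[str, str]  # source-specific field name -> harmonized field name


@dataclass
class DataFabric:
    sources: List[Source] = field(default_factory=list)

    def register(self, source: Source) -> None:
        self.sources.append(source)

    def query(self, **filters: object) -> List[Record]:
        """Fetch from every source, map fields to one vocabulary, then filter."""
        results: List[Record] = []
        for src in self.sources:
            for raw in src.fetch():
                row: Record = {src.field_map.get(k, k): v for k, v in raw.items()}
                row["_source"] = src.name  # keep lineage so users know where a value came from
                if all(row.get(k) == v for k, v in filters.items()):
                    results.append(row)
        return results


# Two illustrative systems that describe the same part with different field names.
erp = Source("erp_eu", lambda: [{"material_no": "P-100", "stock_qty": 40}],
             {"material_no": "part", "stock_qty": "qty_on_hand"})
wms = Source("wms_us", lambda: [{"sku": "P-100", "on_hand": 15}, {"sku": "P-200", "on_hand": 7}],
             {"sku": "part", "on_hand": "qty_on_hand"})

fabric = DataFabric()
fabric.register(erp)
fabric.register(wms)
rows = fabric.query(part="P-100")
print(rows)
print(sum(r["qty_on_hand"] for r in rows))
```

The design choice worth noticing is that harmonization lives in the mapping, not in a copied dataset: change a source and the next query reflects it. A production data platform would also need to handle performance, security, and governance, which this toy ignores.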

Data fabrics rely on knowledge graphs to contextualize data. Contextualization is the process of identifying and representing relationships between data elements so that they mirror the relationships that exist between the corresponding things in the physical world.

A knowledge graph creates relationships across data sources, and SAP recently released one. Knowledge graphs “weave” together previously unconnected data, often residing in different applications or data lakes, and in doing so can uncover hidden patterns and relationships that no human could detect.
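The “weaving” can be illustrated with a small, hypothetical example. It is not SAP’s knowledge graph. Facts from three otherwise unconnected systems (procurement, product lifecycle, and CRM) become edges that meet at shared entity IDs, so a question such as “which customers are exposed if something disrupts this region?” can be answered by following relationships across all three. The entity names and source labels are invented for illustration; the search is a plain breadth-first search.

```python
from collections import defaultdict, deque
from typing import Dict, List, Optional, Set, Tuple

# (subject, relation, object, source system): illustrative facts from three separate systems
FACTS = [
    ("Supplier:Acme", "supplies", "Part:P-100", "procurement"),
    ("Part:P-100", "used_in", "Product:X1", "plm"),
    ("Product:X1", "ordered_by", "Customer:Globex", "crm"),
    ("Supplier:Acme", "located_in", "Region:Gulf", "procurement"),
]


def build_graph(facts) -> Dict[str, List[Tuple[str, str, str]]]:
    """Index facts by entity so edges from different sources meet at shared entity IDs."""
    graph: Dict[str, List[Tuple[str, str, str]]] = defaultdict(list)
    for subj, rel, obj, src in facts:
        graph[subj].append((rel, obj, src))
        graph[obj].append((f"inverse_{rel}", subj, src))  # allow traversal in both directions
    return graph


def find_path(graph, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the shortest chain of relationships linking two entities."""
    queue = deque([(start, [start])])
    seen: Set[str] = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt, src in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [f"-[{rel} @{src}]->", nxt]))
    return None


graph = build_graph(FACTS)
print(find_path(graph, "Region:Gulf", "Customer:Globex"))
```

No single source contains the answer; the chain only exists once the facts are linked, which is the sense in which a knowledge graph surfaces relationships that were previously hidden.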

Descending the Hype Curve

Why does this matter? We are starting to get clarity about the most advanced form that enterprise AI will take, as well as the necessary steps and technologies to get there. We are descending the AI hype curve.

As my colleague Colin Masson, ARC Advisory Group’s expert on industrial use cases for AI, pointed out, end users at ARC’s 2024 Industry Leadership Forum were largely observers eager to learn about topics like GenAI, agents, and industrial use cases for AI. At ARC’s 2025 Leadership Forum, the shift was “palpable.” Companies like Celanese were able to point to advanced uses of AI that were providing significant ROI.

The most advanced use case for AI is enterprise-wide orchestration of work. This work is supported by a genuinely advanced copilot capable of surfacing precisely the information a worker needs, with the proper context, just when it is needed!

One of the core principles of supply chain management is breaking down silos. But cross-functional cooperation is not just a need for supply chain departments; it is needed across the enterprise.

No large company relies on applications from just one vendor. Even companies that rely on SAP may run different instances of SAP in different business units or regions. Even within a single ERP instance, it is not always easy to get information to flow seamlessly across applications to where it is needed. Finally, external and unstructured data must often be collected and contextualized to make better decisions.

The AI Journey

For this advanced vision of using AI for enterprise-wide orchestration, a company first needs to clean and harmonize its data, which data fabrics support. Then, agentic AI is employed to solve distinct problems. Agentic AI is a group of agents working together, and not all of these agents need to be based on AI. Sometimes a microservice within an application might be the agent. In some cases, math is applied to data to provide an answer; in others, agents do need to rely on forms of artificial intelligence such as machine learning.
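The point that an “agent” need not be AI can be shown with a minimal, hypothetical sketch. It does not represent SAP’s agent framework or Joule. Every agent exposes the same `handle` method; one agent is plain math (the textbook reorder-point formula, daily demand × lead time + safety stock), another wraps an existing application function as a stand-in for a microservice, and a simple orchestrator chains them. The class names, the `STOCK` table, and the numbers are illustrative assumptions.

```python
from typing import Any, Callable, Dict, Protocol

Task = Dict[str, Any]


class Agent(Protocol):
    """Anything that can handle a task: a formula, a microservice call, or an ML model."""

    def handle(self, task: Task) -> Task: ...


class ReorderPointAgent:
    """A 'math' agent: reorder point = average daily demand * lead time + safety stock."""

    def handle(self, task: Task) -> Task:
        rop = task["daily_demand"] * task["lead_time_days"] + task["safety_stock"]
        return {"reorder_point": rop, "reorder_now": task["on_hand"] <= rop}


class ServiceAgent:
    """Wraps an existing application function, standing in for a microservice call."""

    def __init__(self, fn: Callable[[Task], Task]) -> None:
        self.fn = fn

    def handle(self, task: Task) -> Task:
        return self.fn(task)


class Orchestrator:
    """Routes each task type to the agent registered for it."""

    def __init__(self) -> None:
        self.agents: Dict[str, Agent] = {}

    def register(self, task_type: str, agent: Agent) -> None:
        self.agents[task_type] = agent

    def dispatch(self, task_type: str, task: Task) -> Task:
        return self.agents[task_type].handle(task)


# Hypothetical inventory "service" with illustrative stock levels.
STOCK = {"P-100": 110}

orchestrator = Orchestrator()
orchestrator.register("inventory", ServiceAgent(lambda t: {"on_hand": STOCK.get(t["part"], 0)}))
orchestrator.register("reorder", ReorderPointAgent())

# Agents working together: one agent's output feeds the next agent's input.
stock = orchestrator.dispatch("inventory", {"part": "P-100"})
decision = orchestrator.dispatch(
    "reorder", {"daily_demand": 20, "lead_time_days": 5, "safety_stock": 30, **stock}
)
print(decision)
```

Because the orchestrator only depends on the shared interface, a machine-learning forecaster could later replace the fixed `daily_demand` input without changing the other agents.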

These agents help solve distinct problems. Fully orchestrating all work across an enterprise might require creating thousands of agents. SAP’s platform supports the creation of agents, which are then surfaced to workers through a copilot-style user interface. SAP’s is called Joule.

SAP is, and has been, actively creating agents. For example, ARC was recently briefed on SAP’s transportation management product. SAP co-innovated an agent focused on intelligent cargo receipt with a major auto manufacturer. About 1,000 trucks per day arrive at this manufacturer’s biggest plant, and about 20% of them show up unexpectedly, without the advance shipping notice (ASN) they were supposed to send. For each of these trucks, the guard would have to spend upwards of an hour going through paperwork to determine whether the shipment was needed and then create a digital record so the receiving process could continue.

Now the AI agent can scan those documents, and a large language model that has been trained for the task finds all the relevant information: the origin, the destination, the product, and the quantity. That information is used to dynamically create a consignment within SAP’s transportation management system, which can then be used to continue the receiving process. SAP’s TM product managers told us that receiving time for these discrepancy shipments has decreased from about an hour per truck to about 15 minutes.
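SAP has not published how this agent is built, so the following is only a hypothetical sketch of the flow described: pull the four fields (origin, destination, product, quantity) from the paperwork, check that they are complete, and only then create a consignment record. The `extract_fields` function is a simple regex stand-in for the large language model step, and `Consignment` is an illustrative data class, not SAP TM’s data model or API.

```python
import re
from dataclasses import dataclass
from typing import Dict, Optional

REQUIRED = ("origin", "destination", "product", "quantity")


@dataclass
class Consignment:
    # Illustrative structure only; not SAP TM's actual data model.
    origin: str
    destination: str
    product: str
    quantity: int
    status: str = "awaiting_receipt"


def extract_fields(document_text: str) -> Dict[str, str]:
    """Stand-in for the LLM step: pull 'Key: value' lines out of scanned paperwork text."""
    fields: Dict[str, str] = {}
    for key in REQUIRED:
        match = re.search(rf"^{key}\s*:\s*(.+)$", document_text, re.IGNORECASE | re.MULTILINE)
        if match:
            fields[key] = match.group(1).strip()
    return fields


def create_consignment(document_text: str) -> Optional[Consignment]:
    """Build a consignment only when every required field was found and the quantity is numeric."""
    fields = extract_fields(document_text)
    missing = [k for k in REQUIRED if k not in fields]
    if missing or not fields["quantity"].isdigit():
        return None  # route to a person instead of guessing
    return Consignment(fields["origin"], fields["destination"], fields["product"],
                       int(fields["quantity"]))


paperwork = """Origin: Supplier plant, Monterrey
Destination: Assembly plant, Dock 4
Product: Seat frames
Quantity: 240"""
print(create_consignment(paperwork))
```

Returning `None` rather than a partially filled record keeps the guard in the loop for the hard cases while the routine ones flow straight into the receiving process.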

The advantage of the Databricks relationship, however, is a vastly improved ability to create agents across a heterogeneous data environment, including in the many gray spaces that exist between applications and processes. In short, this technology can create a genuinely advanced orchestration layer across the enterprise.

This Is Not an Easy Journey

What we heard at our user forum is that creating the initial agents can be a struggle, but as companies get better at it, the pace of agent creation can increase rapidly.

Hallucinations are rare when a company’s own data is used to create a large language model, but they still occur, and they must be detected and fixed.
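One simple, commonly used safeguard for the detection part is a grounding check: every value the model claims to have extracted should actually appear in the source document. The sketch below is a minimal illustration of that idea under stated assumptions (exact text matching after lowercasing and whitespace cleanup); it is not a description of any vendor’s method, and the sample document and model output are invented.

```python
from typing import Dict, List


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't matter."""
    return " ".join(text.lower().split())


def ungrounded_fields(extracted: Dict[str, str], source_text: str) -> List[str]:
    """Return the names of extracted fields whose values do not appear in the source document.

    A value the model 'found' that is nowhere in the paperwork is a candidate hallucination
    and should go to a person for review rather than flow into a downstream system.
    """
    haystack = normalize(source_text)
    return [name for name, value in extracted.items() if normalize(value) not in haystack]


source = "Bill of lading. Origin: Monterrey. Destination: Dock 4. Qty 240 seat frames."
model_output = {"origin": "Monterrey", "destination": "Dock 4", "quantity": "420"}
print(ungrounded_fields(model_output, source))
```

Exact substring matching is deliberately crude: it will flag legitimate paraphrases, and a short value can pass by accident (for example, “24” is contained in “240”). It catches invented values cheaply, though, and anything it flags can be routed to a reviewer.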

Creating agents that connect the factory floor with the rest of the enterprise won’t be easy. ARC classifies data fabrics into two categories. The first type, which ARC calls an enterprise data graph, is mainly for the transactional data found in applications. ARC classifies Databricks as offering this type of solution.

The second type, which ARC calls an industrial data graph, is for the profoundly messy data found on the factory floor. The copilot agents used on the factory floor are currently created with industrial data fabrics.

Finally, cleaning, harmonizing, and contextualizing data is hard. The data fabric and knowledge graph greatly assist with this, but building this foundation will still be an onerous, multi-year job for most companies. In short, these projects will not deliver rapid ROI.

Companies need to think differently about ROI for this vision of AI. They need to think in a ten-year horizon and consider all the value that could be created across the enterprise if they could truly unlock their data and provide advanced decision support. Most will find the potential value to be massive, but it is, in part, a voyage of faith.

The post The SAP Databricks Alliance is Truly Significant appeared first on Logistics Viewpoints.
