AutoGen, Eliza, Aider

I finally got around to building what people call AI agents. This is a report on my exploration of the toolsets.

Half my intention is to have code assistants do the bulk of the work. Aider has been living on my machine for more than half a year; it's time to make it earn its place.

Aider has been steadily improving, with updates pushed frequently. It's an undiscovered gem obscured by the hype around tools like Cursor.

But putting it to use is like learning a new musical instrument while still having access to the one you're already good at (handcrafting code).

Telling Aider what to do is just not as natural as writing code by hand; every so often in the process I revert to writing code myself. I have to actively fight this urge.

Aider now has a feature that monitors files for comments and makes changes in real time. On top of that, Aider reads documentation when given URLs. Together, these make it game-changing.
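To make the comment-watching workflow concrete, here is a small sketch. It assumes Aider is running with `--watch-files`. As I understand its docs, a comment ending in `AI!` asks Aider to make a change, and one ending in `AI?` asks a question instead. The function and the request written in the comment are hypothetical.

```python
# Assumes Aider is running in this repo as: aider --watch-files
# Saving this file with the "AI!" comment below should prompt Aider to edit slugify.

def slugify(title: str) -> str:
    # also strip punctuation and collapse repeated dashes AI!
    return title.strip().lower().replace(" ", "-")


if __name__ == "__main__":
    print(slugify("  Hello World "))
```

The instruction lives next to the code it concerns, so there's no switching to a chat window. That is much of why it feels closer to handcrafting.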

On to making agents. The instinct is to pick a framework that makes everything easy.

But I've been around the block. If you intend for what you build to have a decent lifespan, frameworks should be approached with a heavy dose of caution.

Framework-less development means not handing your fate over to other people. But it also means repeating mistakes that others have already made.

A healthy use of frameworks is to make them responsible for the right layers of abstraction: the things you have no interest in tweaking in the future. The problem is that you probably have no idea what those things will be when you start.

I was aware of CrewAI a year ago. I was waiting for a cool idea to strike so I could put it to use. That never came. It's not that there were no cool ideas, but none of them warranted a framework that coordinates multiple agents on one single task.

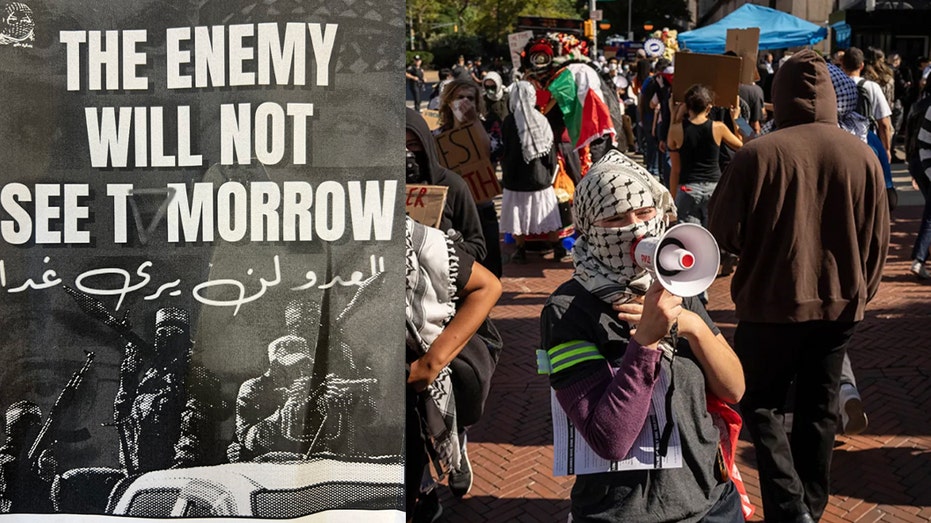

Then @truth_terminal came along and gave me inspiration: I absolutely don't have to build anything useful. In fact, trying to be useful is a recipe for mind blocks.

What I want now is simple: three unpredictable, off-the-wall LLM agents talking to each other. I want to see whether they can arrive at insights that are new to me. This feels promising.

Now, creating a single agent is trivial. I write arbitrary ones that run from within Emacs all the time.

The Eliza framework is newly popular, and the results look promising. I built two agents (characters) on it. Creating an agent with lore and specific knowledge was rather involved. It's like a five-page form, except you fill it in as a JSON file.

I randomly generated most of these details, and even so it took me half an hour to craft a character. The upside is that character creation is an entirely creative process, free of any engineering concern.

Eliza abstracts away how these character details eventually get sent to the LLM. The end result is impressive: the characters do speak with personalities consistent with their configuration. If you want a character to sound quirky and give it enough sample texts and background stories, it does come out sounding quirky.

In fact, that's Eliza's bread and butter: creating personalities, as opposed to getting things done (being useful). Nothing wrong with that.

That is, until I wanted two characters to talk to each other. There's just no way to do it unless I'm willing to have the conversations take place publicly on Twitter. At least in this iteration, Eliza is simply built to run one single, awesome Twitter chatbot.

It feels like Eliza being written in TypeScript is a draw in itself. If that's true, I find it suspicious. A framework's quality should stand on its own, not on its choice of ecosystem. Many products in the JavaScript world share this trait.

That said, the number of plugins being made for Eliza is impressive as a social phenomenon. Plugins give agents abilities beyond just talking. If Eliza ends up having a long shelf life, these plugins will be the reason.

However, how lindy Eliza is remains to be seen. From what I've dug up in its innards, Eliza feels overrated.

That's an ironic statement. In the interest of giving you an idiot-proof way to create an autonomous KOL, it does a lot on your behalf. It does too much, I might say. If your use case strays even a little from its course, there's no easy way out other than hacking the framework.

Subsequent research brought me to AutoGen. It does not try to do too much; not being opinionated is part of its deal.

Best of all, it's trivial in AutoGen to have multiple agents talk to each other. They've got the whole team infrastructure figured out.
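AutoGen packages multi-agent conversation as "teams". If I read its AgentChat docs right, `RoundRobinGroupChat` is one of them. With the framework stripped away, the core turn-taking idea fits in a few lines. Below is a minimal framework-less sketch of a round-robin group chat, not AutoGen's implementation. The `generate` function is a hypothetical stand-in for a real LLM call, and the agent names are made up.

```python
from dataclasses import dataclass
from typing import Callable

# (speaker, text) pairs; "user" marks the opening prompt.
Transcript = list[tuple[str, str]]

# Hypothetical stand-in for an LLM call: given an agent's system prompt and
# the transcript so far, return that agent's next utterance.
Generate = Callable[[str, Transcript], str]


@dataclass
class Agent:
    name: str
    system_prompt: str
    generate: Generate


def round_robin(agents: list[Agent], opening: str, max_turns: int) -> Transcript:
    """Agents take turns in a fixed order; stop after max_turns replies."""
    transcript: Transcript = [("user", opening)]
    for turn in range(max_turns):
        speaker = agents[turn % len(agents)]
        # Every agent sees the full shared transcript, not just the last message.
        reply = speaker.generate(speaker.system_prompt, transcript)
        transcript.append((speaker.name, reply))
    return transcript


if __name__ == "__main__":
    # Canned "model" so the sketch runs offline; swap in a real client call.
    def parrot(system_prompt: str, transcript: Transcript) -> str:
        last_speaker, last_text = transcript[-1]
        return f"({system_prompt}) {last_speaker} said: {last_text}"

    trio = [Agent(n, f"I am {n}", parrot) for n in ("moth", "oracle", "clerk")]
    for speaker, text in round_robin(trio, "What is a lamp?", max_turns=3):
        print(f"{speaker}: {text}")
```

A fixed speaking order is the simplest policy. A model-driven selector that picks the next speaker is the obvious next step, and AutoGen offers that kind of team too.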

Having tried it, I found it's not as intuitive to create characters that speak as coherently as Eliza-made ones. Tweaking the characters' behavior took repeated prompt engineering. But I'm better off tweaking prompts in English than wrangling a framework to get what I want.
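In AutoGen, a character mostly lives in the agent's system message (the `system_message` argument of `AssistantAgent`), so the prompt engineering comes down to what goes into that string. Below is a minimal sketch of assembling one from character notes, loosely mirroring the kind of fields an Eliza character file asks for. The field names and the closing instruction are my own assumptions. They are not Eliza's schema, and this is not how Eliza builds its prompts.

```python
def build_system_prompt(persona: dict) -> str:
    """Fold hypothetical persona fields into a single system message.

    Expected keys (all illustrative, not Eliza's or AutoGen's schema):
    name (str), bio (list[str]), style (list[str]), examples (list[str]).
    Only "name" is required.
    """
    lines = [f"You are {persona['name']}."]
    if persona.get("bio"):
        lines.append("Background: " + " ".join(persona["bio"]))
    if persona.get("style"):
        lines.append("Speaking style: " + "; ".join(persona["style"]))
    if persona.get("examples"):
        # Sample utterances give the model a concrete voice to imitate.
        lines.append("Examples of how you talk:")
        lines.extend(f"- {ex}" for ex in persona["examples"])
    lines.append("Stay in character. Keep replies under 80 words.")
    return "\n".join(lines)


if __name__ == "__main__":
    moth = {
        "name": "Moth",
        "bio": ["Has lived inside a desk lamp for three summers."],
        "style": ["terse", "ominous", "never uses capital letters"],
        "examples": ["the light lies. it always lies."],
    }
    print(build_system_prompt(moth))
```

Keeping the character as data and the prompt as a function makes each round of prompt tweaking a one-line change.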

AutoGen was created to get things done. It comes with agents that browse the web, handle files, and run code: all the things an autonomous being is expected to do.

As far as I can tell, it does not come with RAG built in. The ready-made browser agent is also giving me a hard time, because Playwright somehow doesn't install cleanly on Arch Linux.

AutoGen does not have Eliza's social validation, but it does have Microsoft's deep pockets behind it. I'm more optimistic about AutoGen's lindy-ness.

It's only a matter of time before I get my agents to write and run their own code. For now, they'll have to settle for trippy conversations among themselves.

Before I get there though, I have this inkling that code written by machines should not be subjected to the same criteria as code written for humans. Though like it or not, LLMs still end up writing code like humans.

But fundamentally, if this is code written autonomously by machines, for themselves, there's no reason it should be arranged in human-friendly ways.

It's conceivable to me that machines would prefer writing assembly because it makes more sense to them.

It can be argued that we still want code legibility as a safeguard, so humans can peek in, but ultimately that's a losing battle. It amounts to demanding that a new life form conform to human standards, which is itself tenuous at best.
