What’s Next for the KitOps Project

KitOps 1.0: Production Proven

Today is an important milestone for the KitOps open source project as we release version 1.0. This isn’t just about new features (although automatically creating a ModelKit from Hugging Face or a scanned directory is exciting); it’s about the start of a journey for every KitOps user. KitOps is downloaded thousands of times a week and is used in production by global enterprises, secure government agencies, and a slew of smaller (but equally important) organizations.

This 1.0 says KitOps has been proven in production by some of the most demanding organizations, and it’s ready for whatever you can throw at it!

Of course there are new features too thanks to our awesome community of developers, authors, and feedback providers:

- Generate a ModelKit by importing an existing Hugging Face repository (no need to edit the Kitfile)

- Generate a Kitfile automatically by scanning a directory structure

- The config object for ModelKits now stores digests with each layer, to make it easier to access a specific layer's blob

- Layers are now packed to include directories relative to the context directory, making unpacking tarballs simpler

- Tail logs with the `-f` flag

- The Kitfile `model` object now has a `format` field so you can be explicit about the model’s serialization format (e.g., GGUF, ONNX, etc.)
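The layer-packing change is easiest to picture with Python’s standard `tarfile` module. This is a minimal sketch, not KitOps’ own packing code; the helper `pack_layer` and the `models/v1/model.gguf` project layout are hypothetical. It shows the idea behind the change: each archive entry is named relative to the context directory, so unpacking the tarball anywhere recreates the same relative layout.

```python
import os
import tarfile
import tempfile


def pack_layer(context_dir: str, layer_path: str, out_file: str) -> list[str]:
    """Pack one layer into a gzipped tarball with entry names relative to context_dir.

    Hypothetical helper for illustration; not the Kit CLI's implementation.
    Returns the member names stored in the archive.
    """
    abs_layer = os.path.join(context_dir, layer_path)
    with tarfile.open(out_file, "w:gz") as tar:
        # arcname strips the context prefix, so entries look like "models/v1/model.gguf"
        # rather than embedding the full filesystem path of the context directory.
        tar.add(abs_layer, arcname=os.path.relpath(abs_layer, context_dir))
    with tarfile.open(out_file, "r:gz") as tar:
        return tar.getnames()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as ctx, tempfile.TemporaryDirectory() as out_dir:
        # Build a tiny hypothetical project: <ctx>/models/v1/model.gguf
        os.makedirs(os.path.join(ctx, "models", "v1"))
        with open(os.path.join(ctx, "models", "v1", "model.gguf"), "wb") as f:
            f.write(b"placeholder weights")
        names = pack_layer(ctx, "models", os.path.join(out_dir, "model-layer.tar.gz"))
        print(names)  # relative paths only, ready to unpack into any directory
```

Because the stored names carry no machine-specific prefix, whoever unpacks the layer gets `models/v1/model.gguf` under their own target directory.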

Despite the major version change, KitOps 1.0 is backward compatible.

Before What’s Next, What Started It?

KitOps started with a simple question:

“Why isn’t there an open standard for packaging an AI/ML project so that it can be imported into or exported from any tool or environment?”

Every AI/ML tool has its own packaging format and approach, making moving projects from tool to tool (or worse yet, replacing one vendor’s tool with another) less fun than Carrie’s high school prom. So why wasn’t there a standard already? Because tool and infrastructure vendors don’t like choice: they want customers locked into their formats and tools. That sounds great to investors, but it’s ultimately a losing strategy because users always need choices. That’s why PDF, GIF, Markdown, and other open standards are…well, standards!

Luckily, the original KitOps authors were all readers of xkcd, so we didn’t create a 15th standard. Instead, we built KitOps to let teams share and reproduce their AI/ML projects using the existing OCI standard (the container one). But…we didn’t want to just jam models, datasets, codebases, and documentation into a single container image, because not everyone needs everything to do their part of the project lifecycle.

Instead, we packaged everything as an OCI Artifact called a ModelKit that includes models, datasets, codebases, and documentation in separate layers. We then built the Kit CLI to make it trivial for people or pipelines to pull only the layers they need to do their job.

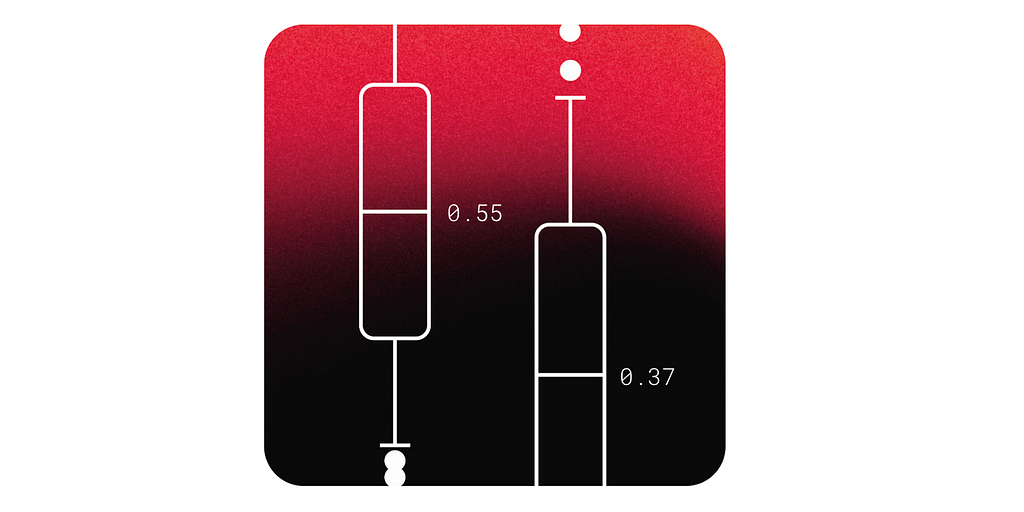
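To make the layered idea concrete, here is a minimal Python sketch of how a client could read a ModelKit-style OCI manifest and pick only the layers it needs. The per-layer fields (`mediaType`, `digest`, `size`) follow the OCI image manifest format; the media-type strings, digests, and sizes below are placeholders assumed for illustration, not values taken from KitOps. In practice the Kit CLI does this selection for you.

```python
"""Sketch: choose which layers of a ModelKit-style OCI manifest to fetch.

Assumptions: the media types, digests, and sizes are illustrative placeholders.
Only the descriptor shape (mediaType, digest, size) follows the OCI image manifest spec.
"""

# Hypothetical media types, one per ModelKit layer kind.
LAYER_TYPES = {
    "model": "application/vnd.kitops.modelkit.model.v1.tar+gzip",
    "datasets": "application/vnd.kitops.modelkit.dataset.v1.tar+gzip",
    "code": "application/vnd.kitops.modelkit.code.v1.tar+gzip",
    "docs": "application/vnd.kitops.modelkit.docs.v1.tar+gzip",
}

# Placeholder manifest: digests and sizes are made up for the example.
EXAMPLE_MANIFEST = {
    "schemaVersion": 2,
    "layers": [
        {"mediaType": LAYER_TYPES["model"], "digest": "sha256:" + "a" * 64, "size": 4_000_000_000},
        {"mediaType": LAYER_TYPES["datasets"], "digest": "sha256:" + "b" * 64, "size": 12_000_000_000},
        {"mediaType": LAYER_TYPES["code"], "digest": "sha256:" + "c" * 64, "size": 2_000_000},
        {"mediaType": LAYER_TYPES["docs"], "digest": "sha256:" + "d" * 64, "size": 50_000},
    ],
}


def select_layers(manifest: dict, wanted: set[str]) -> list[dict]:
    """Return only the layer descriptors whose kind is in `wanted`, in manifest order."""
    wanted_types = {LAYER_TYPES[kind] for kind in wanted}
    return [layer for layer in manifest["layers"] if layer["mediaType"] in wanted_types]


def bytes_to_fetch(layers: list[dict]) -> int:
    """Total size of the blobs a client would download for these layers."""
    return sum(layer["size"] for layer in layers)


if __name__ == "__main__":
    # A deployment pipeline that only needs the weights skips datasets, code, and docs.
    deploy = select_layers(EXAMPLE_MANIFEST, {"model"})
    print(len(deploy), "layer(s) selected")
    print(bytes_to_fetch(deploy), "of", bytes_to_fetch(EXAMPLE_MANIFEST["layers"]), "bytes")
```

Each role (deployment, data science, documentation review) passes a different `wanted` set, and the digests of the selected descriptors are what a client would then request from the registry.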

Teams using KitOps are seeing big improvements in security and reduced risk, plus a 31.9% decrease in the time it takes to bring an AI/ML project from conception to completion:

- KitOps stores, versions, and secures their AI/ML projects in the same place their containers are already kept with no additional infrastructure (everyone has a container registry already)

- KitOps ModelKits are tamper-proof, secure, and can be digitally signed

- KitOps ModelKits can be used with existing MLOps and DevOps tools without any changes

- KitOps selective unpacking reduces time and space needed to deploy and reproduce AI/ML projects

- KitOps packages are easy to turn into runnable containers or Kubernetes deployments
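Tamper-evidence in an OCI registry comes from content addressing: each layer is referenced by the digest of its bytes, so any change to the bytes produces a different digest and fails verification. The Python sketch below shows that check with `hashlib`; the function names are hypothetical, it is a simplified illustration rather than KitOps code, and it does not cover digital signatures, which are handled by separate signing tooling.

```python
import hashlib


def oci_digest(blob: bytes) -> str:
    """Return an OCI-style digest string ("algorithm:hex") for a blob, using SHA-256."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def verify_blob(blob: bytes, expected_digest: str) -> bool:
    """Recompute the blob's digest and compare it with the one recorded in the manifest."""
    algorithm, sep, _ = expected_digest.partition(":")
    if not sep or algorithm != "sha256":
        # This sketch only handles SHA-256 digests.
        raise ValueError(f"unsupported digest: {expected_digest!r}")
    return oci_digest(blob) == expected_digest


if __name__ == "__main__":
    original = b"model weights v1"
    recorded = oci_digest(original)  # what a manifest would store for this layer
    print(verify_blob(original, recorded))                      # True
    print(verify_blob(b"model weights v1 (edited)", recorded))  # False
```

Signing builds on this: a signature covers the manifest, and the manifest pins every layer digest, so verifying both protects the whole ModelKit.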

What’s Next for KitOps?

KitOps was part of a larger goal: to establish an open standard for packaging AI/ML projects that will be adopted by all vendors. We’ve taken two important steps on that road:

1/ Drafted a CNCF-sanctioned model packaging specification

The KitOps maintainers along with members of Red Hat, ANT Group, ByteDance, and Jozu have discussed and drafted a new specification that would give OCI registries and runtimes a consistent format and interfaces for handling AI/ML projects. This CNCF standard would simplify sharing and reproducing AI/ML projects in Kubernetes and its associated projects.

2/ Submitted KitOps to the CNCF as a sandbox project

We have submitted KitOps as a sandbox project to the CNCF and expect to have it approved in Q1 2025. This will make KitOps community property and make it simpler for other vendors to add KitOps integrations to their products.

It’s always hard letting something you’ve built with love and care go out into the world, but we’re even more excited to see how you, the AI/ML community, adopt and grow KitOps!

-Brad Micklea, KitOps Project Lead & Jozu CEO

Join the KitOps community

For support, release updates, and general KitOps discussion, please join the KitOps Discord. Follow KitOps on X for daily updates.

If you need help, there are several ways to reach our community and maintainers, outlined in our support doc.

Reporting Issues, Suggesting Features, and Contributing

Your insights help KitOps evolve as an open standard for AI/ML. We deeply value the issues and feature requests we get from users in our community. To contribute your thoughts, navigate to the Issues tab and click the green New Issue button. Our templates guide you in providing the essential details we need to address your request effectively.

We love our KitOps community and contributors. To learn more about the many ways you can contribute (you don't need to be a coder) and how to get started see our Contributor's Guide. Please read our Governance and our Code of Conduct before contributing.

A Community Built on Respect

At KitOps, inclusivity, empathy, and responsibility are at our core. Please read our Code of Conduct to understand the values guiding our community.

Roadmap

We share our roadmap openly so anyone in the community can provide feedback and ideas. Let us know what you'd like to see by pinging us on Discord or creating an issue.
