Pgvectorscale: 28x lower p95 latency than Pinecone, according to Timescale

I want to share a Timescale benchmark blog post that caught my attention (you can read the full article on the Timescale Blog):

Introducing pgvectorscale, a new open-source extension that makes PostgreSQL an even better database for AI applications. Pgvectorscale builds upon pgvector to unlock large-scale, high-performance AI use cases previously only achievable with specialized vector databases like Pinecone.

When building an AI application, many developers ask themselves, “Do I need a standalone vector database, or can I just use a general-purpose database I already have and know?”

And while general-purpose databases like PostgreSQL have gained popularity for vector storage and search thanks to their familiarity and extensions like pgvector, the one argument for opting to use a dedicated vector database, like Pinecone, has been the promise of greater performance. The reasoning goes like this: dedicated vector databases have purpose-built data structures and algorithms for storing and searching large volumes of vector data, thus offering better performance and scalability than general-purpose databases with added vector support.

We built pgvectorscale to make PostgreSQL a better database for AI and to challenge the notion that PostgreSQL and pgvector are not performant for vector workloads. Pgvectorscale brings such specialized data structures and algorithms for large-scale vector search and storage to PostgreSQL as an extension, helping it deliver performance comparable, and often superior, to that of specialized vector databases like Pinecone.

Pgvectorscale: High-performance, cost-efficient scaling for large vector workloads on PostgreSQL

Pgvectorscale is an open-source PostgreSQL extension that builds on pgvector, enabling greater performance and scalability (keep reading for the actual numbers). By using pgvector and pgvectorscale, developers can build more scalable AI applications, benefiting from higher-performance embedding search and cost-efficient storage.

Licensed under the open-source PostgreSQL License, pgvectorscale complements pgvector by leveraging the pgvector data type and distance functions, further enriching the PostgreSQL ecosystem for building AI applications. While pgvector is written in C, the pgvectorscale extension is written in Rust, giving the community a new avenue to contribute to vector support in PostgreSQL.
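Because pgvectorscale reuses pgvector's distance functions, it helps to see what those functions compute. The sketch below is a plain-Python illustration of the arithmetic behind pgvector's three main distance operators: <-> (Euclidean/L2 distance), <#> (negative inner product), and <=> (cosine distance). It is not pgvector's C implementation, and the Python function names are our own.

```python
import math
from typing import Sequence


def _check(a: Sequence[float], b: Sequence[float]) -> None:
    # pgvector rejects comparisons between vectors of different dimensions.
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance: the quantity behind pgvector's <-> operator."""
    _check(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def negative_inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Negated dot product, as returned by <#>, so that a smaller value still
    means 'more similar' when results are sorted in ascending order."""
    _check(a, b)
    return -sum(x * y for x, y in zip(a, b))


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity: the quantity behind pgvector's <=> operator."""
    _check(a, b)
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


if __name__ == "__main__":
    query = [1.0, 2.0]
    doc = [3.0, 4.0]
    print(l2_distance(query, doc))
    print(negative_inner_product(query, doc))
    print(cosine_distance(query, doc))
```

All three are "smaller is closer", which is why nearest-neighbor queries sort on them in ascending order.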

Pgvectorscale builds on pgvector with two key innovations:

- StreamingDiskANN vector search index: StreamingDiskANN overcomes limitations of in-memory indexes like HNSW (hierarchical navigable small world) by storing part of the index on disk, making it more cost-efficient to run and scale as vector workloads grow. Inspired by research at Microsoft and then improved on by Timescale researchers, pgvectorscale’s StreamingDiskANN index optimizes pgvector data for low-latency, high-throughput search without sacrificing accuracy. The ability to store the index on disk vastly decreases the cost of storing and searching over large numbers of vectors, since SSDs are much cheaper than RAM.

- Statistical Binary Quantization (SBQ): Developed by researchers at Timescale, this technique improves on standard binary quantization by increasing accuracy when quantization is used to reduce the space needed for vector storage.
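To make the quantization idea concrete: a 768-dimension embedding stored as 32-bit floats takes 768 x 4 = 3,072 bytes, while one bit per dimension takes 768 / 8 = 96 bytes, a 32x reduction. The sketch below illustrates the general technique under two assumptions of ours: that the "statistical" part means replacing standard binary quantization's fixed zero threshold with a per-dimension mean learned from the data, and that accuracy is recovered by rescoring a shortlist with the full-precision vectors. pgvectorscale's actual SBQ (written in Rust) may differ in its details, and every function name here is hypothetical.

```python
from statistics import fmean
from typing import List, Sequence


def learn_thresholds(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Per-dimension mean over a sample of vectors (the assumed 'statistical' step).

    Plain binary quantization would use 0.0 for every dimension instead.
    """
    dims = len(vectors[0])
    return [fmean(v[d] for v in vectors) for d in range(dims)]


def quantize(vec: Sequence[float], thresholds: Sequence[float]) -> int:
    """Pack one bit per dimension into an int: bit d is 1 if vec[d] > thresholds[d]."""
    code = 0
    for d, (x, t) in enumerate(zip(vec, thresholds)):
        if x > t:
            code |= 1 << d
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed codes (cheap to compute)."""
    return bin(a ^ b).count("1")


def search(query: Sequence[float], vectors: Sequence[Sequence[float]],
           codes: Sequence[int], thresholds: Sequence[float],
           k: int, candidates: int) -> List[int]:
    """Rank by Hamming distance on the codes, then rescore a shortlist exactly."""
    q_code = quantize(query, thresholds)
    # Cheap pass that touches only the compact binary codes.
    shortlist = sorted(range(len(codes)), key=lambda i: hamming(q_code, codes[i]))[:candidates]
    # Exact squared L2 distance on the full-precision vectors, shortlist only.
    shortlist.sort(key=lambda i: sum((a - b) ** 2 for a, b in zip(query, vectors[i])))
    return shortlist[:k]


if __name__ == "__main__":
    data = [[0.9, 0.1, 0.5], [0.1, 0.8, 0.4], [0.85, 0.2, 0.6], [0.2, 0.9, 0.3]]
    thresholds = learn_thresholds(data)
    codes = [quantize(v, thresholds) for v in data]
    print(search([0.88, 0.15, 0.55], data, codes, thresholds, k=2, candidates=3))  # [0, 2]
```

The `candidates` parameter is the trade-off in miniature: a larger shortlist costs more exact distance computations but gives the rescoring step more chances to catch neighbors that the coarse binary codes ranked poorly.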

We gave a sneak peek of pgvectorscale to a select group of developers who are building AI applications with PostgreSQL. Here’s what John McBride, Head of Infrastructure at OpenSauced, a company using PostgreSQL to build an AI-enabled analytics platform for open-source projects, had to say:

“Pgvectorscale is a great addition to the PostgreSQL AI ecosystem. The introduction of Statistical Binary Quantization promises lightning performance for vector search and will be valuable as we scale our vector workload.”

Keep reading for an overview of StreamingDiskANN and Statistical Binary Quantization. For a technical deep dive into the pgvectorscale innovations, see our “how we built it” companion post.

Pgvector vs. Pinecone: Performance impact of pgvectorscale

Before “delving” into pgvectorscale’s StreamingDiskANN index for pgvector and its novel approach to binary quantization, let’s briefly unpack our claim that pgvectorscale helps PostgreSQL achieve performance comparable, and often superior, to that of specialized vector databases like Pinecone.

To test the performance impact of pgvectorscale, we compared the performance of PostgreSQL with pgvector and pgvectorscale against Pinecone, widely regarded as the market leader for specialized vector databases, on a benchmark using a dataset of 50 million Cohere embeddings (of 768 dimensions each). (We go into detail about the benchmarking methodology and results in this pgvector vs. Pinecone comparison blog post.)

PostgreSQL with pgvector and pgvectorscale outperformed Pinecone’s storage-optimized index (s1) with 28x lower p95 latency and 16x higher query throughput for approximate nearest neighbor queries at 99% recall.

PostgreSQL with pgvector and pgvectorscale extensions outperformed Pinecone’s s1 pod-based index type, offering 28x lower p95 latency.
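The headline comparison rests on three measurements: recall (how many of the true nearest neighbors an approximate query returns), p95 latency (the latency that 95% of queries stay under), and throughput (queries per second). A minimal sketch of how such metrics are commonly computed follows, assuming recall@k against exact-search ground truth and the nearest-rank percentile definition. These are standard conventions and not necessarily the exact definitions used by Timescale's benchmark harness, which the methodology post describes; the function names are ours.

```python
import math
from typing import Sequence


def recall_at_k(approx: Sequence[Sequence[int]], exact: Sequence[Sequence[int]], k: int) -> float:
    """Share of the true k nearest neighbors (from exact search) that the
    approximate index also returned, averaged over all queries."""
    hits = sum(len(set(got[:k]) & set(truth[:k])) for got, truth in zip(approx, exact))
    return hits / (k * len(exact))


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile; p=95 gives the p95 latency."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def throughput_qps(num_queries: int, elapsed_seconds: float) -> float:
    """Queries completed per second of wall-clock time."""
    return num_queries / elapsed_seconds


if __name__ == "__main__":
    latencies_ms = [float(ms) for ms in range(1, 101)]  # toy data: 1..100 ms
    print(percentile(latencies_ms, 95))  # 95.0
    print(recall_at_k([[1, 2, 3], [4, 5, 9]], [[1, 2, 3], [4, 5, 6]], k=3))
    print(throughput_qps(1000, 2.0))  # 500.0
```

Note that latency and throughput are only comparable across systems at a fixed recall target, which is why each result above is quoted together with its recall level.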

Furthermore, PostgreSQL with pgvectorscale achieves 1.4x lower p95 latency and 1.5x higher query throughput than Pinecone’s performance-optimized index (p2) at 90% recall on the same dataset. The p2 pod index is what Pinecone recommends if you want the best possible performance, and, to our surprise, pgvectorscale still helped PostgreSQL outperform it!

Aside: For readers wondering, “What about using the p2 index at 99% recall?” We thought the same thing, but unfortunately, Pinecone doesn't let you tune your index to control the accuracy-performance trade-off, unlike a flexible, more transparent engine like PostgreSQL, which exposes parameters for users to tune while also setting reasonable defaults. We detail this in our companion benchmark methodology post.
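In graph-based indexes such as DiskANN, the accuracy-performance knob is, at its core, the size of the candidate list kept during search. The sketch below is a generic best-first search over a proximity graph in the style described in the DiskANN literature. It is not pgvectorscale's StreamingDiskANN code: graph construction, the on-disk layout, and quantized distances are all omitted, and `search_list_size` is an illustrative parameter name. A larger list typically explores more of the graph, raising recall at the cost of more distance computations per query.

```python
import math
from typing import Dict, List, Sequence, Tuple


def l2(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def greedy_search(graph: Dict[int, List[int]], vectors: Sequence[Sequence[float]],
                  query: Sequence[float], start: int, k: int,
                  search_list_size: int) -> Tuple[List[int], int]:
    """Best-first search that keeps at most `search_list_size` candidates.

    Returns the k closest ids found and the number of distance computations,
    a rough proxy for per-query cost.
    """
    if search_list_size < k:
        raise ValueError("search_list_size must be at least k")
    seen = {start}
    candidates = {start: l2(query, vectors[start])}  # id -> distance to query
    visited = set()
    while True:
        # Expand the closest candidate that has not been expanded yet.
        frontier = [(dist, node) for node, dist in candidates.items() if node not in visited]
        if not frontier:
            break
        _, node = min(frontier)
        visited.add(node)
        for nb in graph[node]:
            if nb not in seen:
                seen.add(nb)
                candidates[nb] = l2(query, vectors[nb])
        # Keep only the search_list_size closest candidates.
        if len(candidates) > search_list_size:
            kept = sorted(candidates.items(), key=lambda item: item[1])[:search_list_size]
            candidates = dict(kept)
    best = sorted(candidates.items(), key=lambda item: item[1])[:k]
    return [node for node, _ in best], len(seen)


if __name__ == "__main__":
    # Toy 1-D "embeddings" on a path graph: node i links to i-1 and i+1.
    vectors = [[float(i)] for i in range(10)]
    graph = {i: [j for j in (i - 1, i + 1) if 0 <= j < 10] for i in range(10)}
    print(greedy_search(graph, vectors, [9.0], start=0, k=2, search_list_size=2))  # ([9, 8], 10)
```

Because every loop iteration expands a node that has never been expanded before, the search always terminates; the list size only controls how many promising detours it is allowed to keep open.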

PostgreSQL with pgvector and pgvectorscale extensions outperformed Pinecone’s p2 pod-based index type, offering 1.4x lower p95 latency.

This impressive performance, combined with the trusted reliability and continuous evolution of PostgreSQL, makes it clear: building on PostgreSQL with pgvector and pgvectorscale is the smart choice for developers aiming to create high-performing, scalable AI applications.

The cost benefits are equally compelling. Self-hosting PostgreSQL with pgvector and pgvectorscale is 75-79% cheaper than using Pinecone. Self-hosting PostgreSQL costs approximately $835 per month on AWS EC2, compared to Pinecone’s $3,241 per month for the storage-optimized index (s1) and $3,889 per month for the performance-optimized index (p2).
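The savings percentages follow from the monthly prices quoted above. A quick check using only those three figures (the function and constant names are ours): with these inputs, the savings come out to about 74.2% against s1 and 78.5% against p2, so the quoted 75-79% range is an approximation. Put differently, Pinecone costs roughly 3.9x (s1) to 4.7x (p2) as much per month in this comparison.

```python
def savings_pct(self_hosted_monthly: float, managed_monthly: float) -> float:
    """Percentage saved by paying self_hosted_monthly instead of managed_monthly."""
    return (1 - self_hosted_monthly / managed_monthly) * 100


POSTGRES_MONTHLY_USD = 835  # self-hosted on AWS EC2, as quoted in the post
PINECONE_MONTHLY_USD = {"s1": 3241, "p2": 3889}  # as quoted in the post

if __name__ == "__main__":
    for index_type, monthly in PINECONE_MONTHLY_USD.items():
        pct = savings_pct(POSTGRES_MONTHLY_USD, monthly)
        print(f"vs. Pinecone {index_type}: {pct:.1f}% cheaper")
    # vs. Pinecone s1: 74.2% cheaper
    # vs. Pinecone p2: 78.5% cheaper
```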

Self-hosting PostgreSQL with pgvector and pgvectorscale offers better performance while being 75-79% cheaper than using Pinecone.

This result puts to bed the claims that PostgreSQL and pgvector are easy to start with but not scalable or performant for AI applications. With pgvectorscale, developers building GenAI applications can enjoy purpose-built performance for vector search without giving up the benefits of a fully featured PostgreSQL database and ecosystem.

And those benefits are numerous. Choosing a standalone vector database would mean you lose out on the full spectrum of data types, transactional semantics, and operational features that exist in a general-purpose database and are often necessary for deploying production apps.

Here’s an overview of how PostgreSQL provides a superior developer experience to standalone vector databases like Pinecone:

- Developers can store vector data alongside multiple other data types—from relational data to geospatial data (with PostGIS) to time series, events, and analytics data (with TimescaleDB).

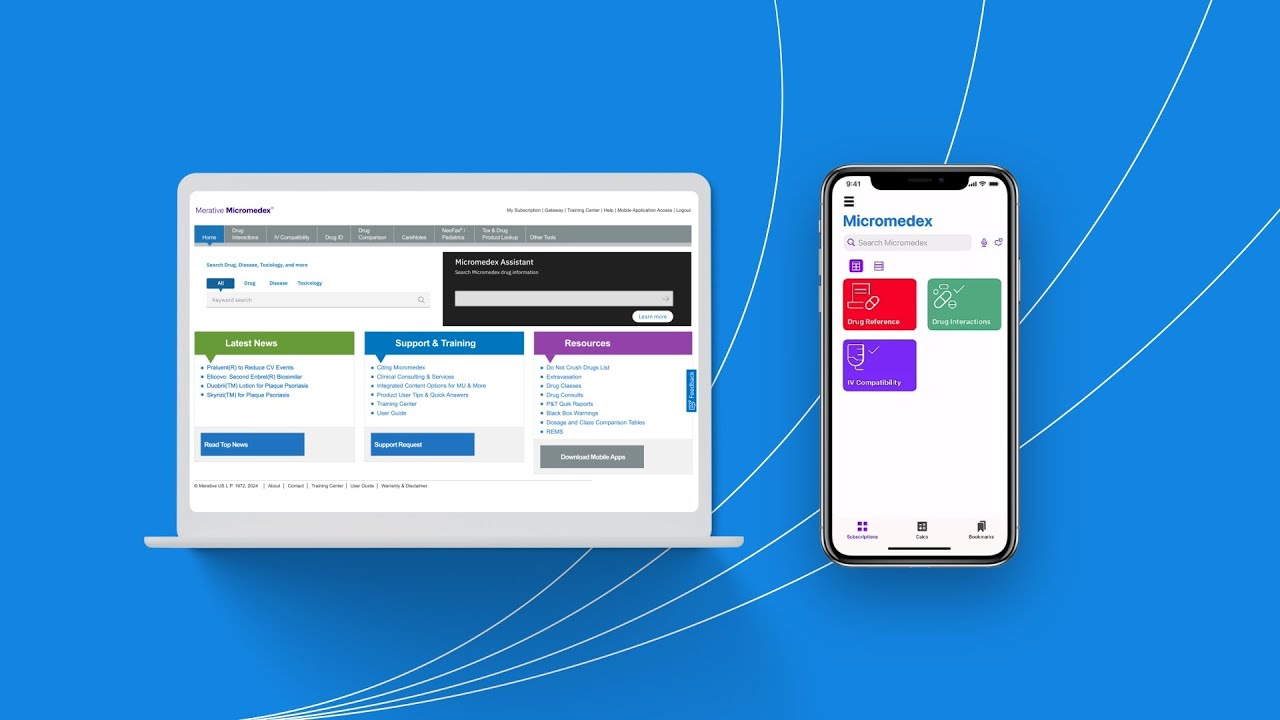

- Better developer experience with PostgreSQL tooling and a rich ecosystem, such as pg_stat_statements for query statistics, EXPLAIN plans for debugging slow queries, and the numerous connectors, libraries, and drivers for every other technology in your AI data stack.

- PostgreSQL and the SQL query language are well known and documented, enabling faster developer onramp and preventing knowledge silos where expertise is isolated to a few individuals who know an esoteric query language or system.

- Rich support for backups: PostgreSQL supports consistent backups, streaming backups, and both incremental and full backups. In contrast, Pinecone only supports a manual operation to take a non-consistent copy of its data called “Collections.”

- Point-in-time recovery for recovering from operator errors.

- High availability for applications that need high-uptime guarantees.

- Flexibility and control: Pinecone lacks the ability to control the accuracy-performance trade-off in approximate nearest neighbor searches. Pinecone seems to offer only three options for controlling index accuracy: the s1, p1, and p2 index types. This locks developers into choosing an accurate-but-very-slow index (s1) or a fast-but-not-accurate index (p2), with no options in between. In contrast, pgvectorscale can be fine-tuned to production requirements using index options. In addition, the PostgreSQL ecosystem supports multiple index types, which can, for example, speed up queries on the associated metadata or perform full-text searches. Plus, partial indexes can speed up queries on key combinations of vector and metadata searches.

Thanks to PostgreSQL with pgvector and pgvectorscale, we can all be the dev on the right:

With pgvectorscale, you can be the dev on the right and just use PostgreSQL, even for the most demanding vector workloads.

For more details on pgvectorscale performance and our PostgreSQL vs. Pinecone benchmark, see our companion post on in-depth benchmark methodology and results.

(This is not our last release today, so keep your eyes peeled.)
